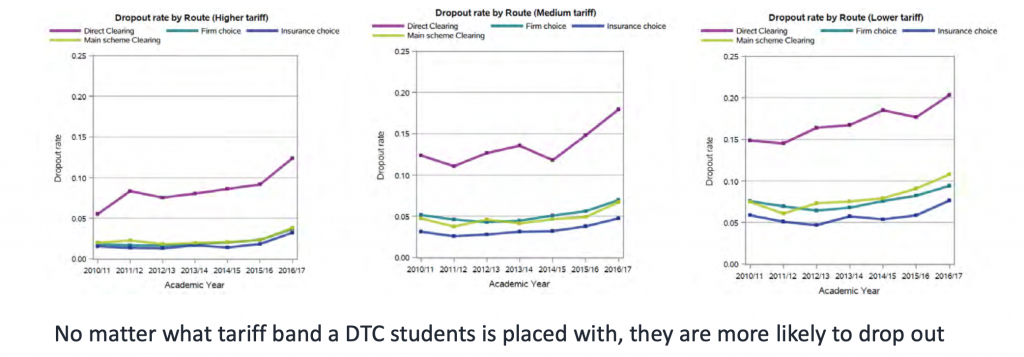

This slide, presented by UCAS’s Chief Executive Clare Marchant at a recent event hosted by Wonkhe on higher education admissions, shows starkly the correlation between clearing and drop-out that I have been banging on about since the 1990s.

In actual fact, it doesn’t quite show that.

What it does show is a clear link between those students who drop out and those students who arrive at university specifically through the ‘direct to clearing’ (DTC) route. This is an unusual pathway, often used by students other than your typical decent-grades-18-year-old school-leaver. So there would be nothing surprising if their outcomes in terms of drop-out weren’t the same as other students’. In other words, it is conceivable that the correlation between clearing and drop-out is peculiar to (or more pronounced among) DTC applicants.

I don’t think so and I have good evidence for thinking otherwise. For several years around the turn of the millennium, Push published data on what we called ‘flunk rates’ (the percentage who drop out or fail) alongside the proportion of students that each HEI admitted through clearing (using data that the universities themselves supplied). The two datasets had a correlation coefficient of 0.91 – in other words, they were close to identical lists.

The media was understandably very interested and I did the media rounds trying to let the figures speak for themselves.

Most universities, even those who had provided their clearing data to Push, dismissed or denied any meaningful link. There were some notable exceptions – vice-chancellors who, rather than blame the messenger, recognised that there may be a problem here.

All I was trying to say was that the data suggested that hasty choices might lead to regret and students without their hoped-for grades should be cautious if looking for clearing options and should consider reapplying instead.

Meanwhile, UCAS itself was also disputing the connection, promoting the line that clearing was the best way to get a university place if you hadn’t made your grades. To be fair, they were relying on data that was even less complete than mine.

Push had surveyed the universities themselves, asking them to self-declare the proportion accepted through clearing. Around two-thirds responded and the numbers that were being reported to us were, on average, about 75% higher than UCAS’s data suggested. Bearing in mind that the universities with the highest clearing rates were, one might imagine, the least likely to share their data, it appeared that the official clearing process was recording perhaps less than half the numbers accepted through that route.
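As a rough check on that arithmetic, here is a minimal Python sketch. The function names are mine, invented for illustration, and the only input taken from the survey is the 75% figure. It assumes the self-declared and official figures were measuring the same thing, which is itself uncertain.

```python
def official_share(reported_excess: float) -> float:
    """Fraction of self-declared clearing admissions captured by the official figure.

    reported_excess is how much higher the self-declared figure is than the
    official one, as a fraction (0.75 means 75% higher).
    """
    return 1.0 / (1.0 + reported_excess)


def excess_needed(share: float) -> float:
    """How much higher the self-declared figure must be for the official
    figure to capture only `share` of it (the inverse of official_share)."""
    return 1.0 / share - 1.0


# Responding universities: self-declared figures about 75% higher than official.
print(f"Official share among responders: {official_share(0.75):.0%}")

# Excess needed before the official figure captures only half.
print(f"Excess needed for a 50% share: {excess_needed(0.5):.0%}")
```

On those assumptions, the responding universities alone imply that the official figures captured a little under three-fifths of clearing admissions. To get below half, the self-declared figures across all universities would need to average more than double the official ones, which is why the guess about the non-responders matters.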

What’s more, the proportion entering through clearing appears to have grown since then (as student numbers have continued to rise), although even that growth may merely be the true scale of clearing being more accurately recorded. Even now though, there is what Mark Corver (DataHE’s admissions number-cruncher extraordinaire) calls a “twilight zone of UCAS data” – the RPAs or ‘record of prior acceptance’ students – and that number is also growing. If the number of students entering through clearing really is rising, it may mean the proportion of students who end up dropping out will rise too.

At this point, let me make it absolutely clear: correlation is not causation and I’m not claiming it is.

It is perfectly possible that arriving through clearing is not the reason why students drop out. Indeed, I’d go so far as to say that, even if it is a reason, it is not the only one. Maybe, for example, clearing gets you into universities whose drop-out rate is higher for an unrelated reason; maybe those who are more likely to drop out are more likely to opt to enter through clearing; or maybe clearing and drop-out share a separate unconnected cause, such as being less well advised.

That said, it didn’t take a genius to see that rash choices were being made by students and universities alike and that there were (and still are) a lot of poor matches arising from the chaos of clearing.

This has really important repercussions as we consider switching to a system of post-qualifications admissions (PQA).

Drop-out is only ever the tip of the iceberg. Most students battle on regardless. After all, let’s remember what drop-out means: you’ve got the student debts, you’ve probably blown your chance of being state-funded throughout a degree, and yet you’ve got nothing to show for it. Worse than that, you have a black hole in your CV which employers might (unfairly) look on as a mark of failure.

Behind the drop-out data, there are thousands of stories of hopes shattered and opportunities dashed. And for every person that drops out, there are many more for whom higher education has been so much less than it could or should have been.

That’s why the admissions system must deliver good matches between students and unis.

It is also why the Department for Education’s unequivocal support for PQA is ill thought out. The last time the government was beguiled into thinking PQA was a good idea (in 2011), at least it had the sense to announce an investigation first rather than pre-empting any consideration of the practicalities. This time, the Secretary of State announced that a consultation would be launched in advance of the introduction of PQA (betraying either a misunderstanding of, or contempt for, the point of consultations).

PQA does look very attractive in principle because it is assumed to mean an end to predicted grades and clearing. However, in practice it probably means an end to neither – and, while failing to make anything better, it might make other matters far worse.

Teachers would still need to use predicted grades to ‘guide’ students to consider applying to HEIs that might accept them – which would need to be done in advance of actual grades so that students could visit them in order to make an informed choice.

While that means HEIs would not be using predictions to make offers, students would still be using them to make applications. The supposed unfairness and lack of reliability of predictions would still be a big factor, but they’d be even less transparent and harder to mitigate. In any case, as Mark Corver (again) has effectively argued, predicted grades are perhaps no less imperfect than actual grades and any bias may not be quite as Gill Wyness, among others, has argued.

Furthermore, unless you shift the date that grades are published and/or the academic year start by months, you’d be compressing application activity into a matter of a few weeks. In other words, rather than no clearing, everyone would be in clearing.

Not only would clearing have to sort about eight times as many applicants, but they would have to go through the whole application system without the support and guidance of their schools and colleges which, by then, the students would have left.

There are ways that PQA could be made to work (I’ve written about this on this blog), but, unsurprisingly, it’s neither as simple nor as attractive as Gavin Williamson’s announcement seemed to assume it to be. It would take far more fundamental and far-reaching changes to post-16 education. (We can chalk this up to the long list of reasons why radical reform might be a good idea, even though no government is ever likely to grasp those nettles and use them to make nettle pyjamas.)

DfE imagines that what needs fixing about admissions is unconditional offers and the unreliability of predictions. In fact, the more serious problems are those connected with poor choices about what and where to study. These build higher hidden hurdles for the disadvantaged. All applicants need to be able to make well-informed, supported choices over time.
